Neuromorphic Processor Project (NPP)

NPP is developing theory, architectures, and digital implementations targeting specific applications of deep neural network technology in vision and audition. Deep learning has become the state-of-the-art machine learning approach for sensory processing, and this project aims at real-time, low-power, brain-inspired solutions targeted at full-custom system-on-chip (SoC) integration. A particular aim of NPP is to develop efficient, data-driven deep neural network architectures that can enable always-on operation on battery-powered mobile devices in conjunction with event-driven sensors.

The project team includes world-leading academic partners in Switzerland, the USA, Canada, and Spain. The project is coordinated by the Institute of Neuroinformatics (INI), and its overall PI is Tobi Delbruck.

The NPP partners in Phase 1 (2015-2018) included:

- Inst. of Neuroinformatics (INI), UZH-ETH Zurich (T. Delbruck, S.-C. Liu, G. Indiveri, M. Pfeiffer)

- iniLabs (F. Corradi)

- Robotics and Technology of Computers Lab, Univ. of Seville (A. Linares-Barranco)

- Inst. of Microelectronics of Seville, IMSE-CNM (B. Linares-Barranco)

- Montreal Institute for Learning Algorithms (MILA), Univ. of Montreal (Y. Bengio)

- Cornell Univ. (R. Manohar)

- Arizona State Univ. (J. Seo)

The NPP Phase 2 partners include:

- Inst. of Neuroinformatics (INI), UZH-ETH Zurich (T. Delbruck, S.-C. Liu, G. Indiveri, M. Pfeiffer)

- Montreal Institute for Learning Algorithms (MILA), Univ. of Montreal (Y. Bengio)

- Robotics and Technology of Computers Lab, Univ. of Seville (A. Linares-Barranco)

- Univ. of Toronto (A. Moshovos)

- Computer Lab, Univ. of Cambridge (R. Mullins)

In Phase 1 of NPP we worked with partners from Canada, the USA, and Spain to develop deep inference theory and processor architectures with state-of-the-art power efficiency. Several key hardware accelerator results inspired by neuromorphic design principles were obtained by the Sensors group. These results exploit the sparsity of neural activations in space and time to reduce computation and, in particular, expensive accesses to external memory, which cost hundreds of times more energy than local memory accesses or arithmetic operations.
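As a rough illustration of why activation sparsity saves work, the Python sketch below computes a dot product that skips zero activations and counts how many multiply-accumulates (MACs) were performed and how many were avoided. It is not the project's hardware design; `relu` and `zero_skipping_dot` are hypothetical names. In an accelerator, each skipped MAC would also avoid fetching the corresponding weight from memory.

```python
def relu(xs):
    """Rectified linear unit applied element-wise to a list of floats."""
    return [x if x > 0.0 else 0.0 for x in xs]


def zero_skipping_dot(activations, weights):
    """Dot product that skips zero activations.

    Returns (result, macs, skipped): macs counts the multiply-accumulates
    actually performed, skipped counts the ones avoided because the
    activation was zero (hypothetical helper, for illustration only).
    """
    result = 0.0
    macs = 0
    skipped = 0
    for a, w in zip(activations, weights):
        if a == 0.0:
            skipped += 1  # nothing to add: skip the multiply and the weight fetch
            continue
        result += a * w
        macs += 1
    return result, macs, skipped


if __name__ == "__main__":
    pre_activations = [-1.5, 2.0, -0.3, -4.0, 0.5, -2.2, 3.0, -0.1]
    acts = relu(pre_activations)
    weights = [0.5] * len(acts)
    result, macs, skipped = zero_skipping_dot(acts, weights)
    print(result, macs, skipped)  # prints: 2.75 3 5
```

Five of the eight pre-activations are negative, so ReLU zeroes them and only three of the eight MACs (and weight fetches) are needed.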

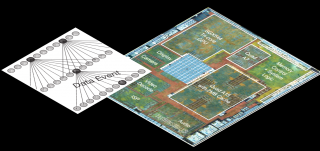

- NullHop exploits spatial feature-map sparsity to provide flexible convolutional neural network (CNN) acceleration, taking advantage of the large fraction of zeros in feature maps produced by the widely used ReLU activation function.

- DeltaRNN exploits temporal change sparsity to accelerate recurrent neural networks (RNNs), relying on the fact that most RNN units change slowly from one time step to the next.
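One way to keep ReLU-induced zeros out of memory traffic, assumed here for illustration rather than taken from NullHop's specification, is to store each feature map as a one-bit-per-value sparsity map plus a list of the nonzero values. The helper names and the 16-bit value width below are hypothetical.

```python
VALUE_BITS = 16  # assumed width of each stored activation (hypothetical)


def encode_sparse(feature_map):
    """Split a flat feature map into a sparsity map (1 bit per value) and its nonzero values."""
    sparsity_map = [1 if v != 0 else 0 for v in feature_map]
    nonzeros = [v for v in feature_map if v != 0]
    return sparsity_map, nonzeros


def decode_sparse(sparsity_map, nonzeros):
    """Rebuild the dense feature map, consuming a nonzero value wherever the map has a 1."""
    values = iter(nonzeros)
    return [next(values) if bit else 0 for bit in sparsity_map]


def encoded_bits(sparsity_map, nonzeros, value_bits=VALUE_BITS):
    """Storage cost of the encoding: one bit per position plus value_bits per nonzero."""
    return len(sparsity_map) + value_bits * len(nonzeros)


if __name__ == "__main__":
    # A 4x4 post-ReLU feature map, flattened row by row; 12 of 16 values are zero.
    fmap = [0, 3, 0, 0,
            0, 0, 7, 0,
            0, 0, 1, 0,
            0, 5, 0, 0]
    smap, nz = encode_sparse(fmap)
    assert decode_sparse(smap, nz) == fmap
    print(len(fmap) * VALUE_BITS, encoded_bits(smap, nz))  # prints: 256 80
```

With 16-bit values this encoding is smaller than the dense map whenever more than 1/16 of the values are zero, a condition that post-ReLU feature maps typically satisfy by a wide margin.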
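The delta principle behind this kind of RNN accelerator can be sketched as an incrementally updated matrix-vector product: an input whose change since it was last propagated stays within a threshold is skipped, so its weight column is neither fetched nor multiplied. This is a simplified illustration, not the DeltaRNN hardware; the class name, threshold and weights are hypothetical, and a full delta RNN would apply the same update to both the input vector and the recurrent hidden-state vector.

```python
class DeltaMatVec:
    """Keeps y = W @ x_ref up to date, where x_ref holds the last propagated inputs (hypothetical)."""

    def __init__(self, weights, threshold):
        self.weights = weights          # list of rows, shape (n_out, n_in)
        self.threshold = threshold      # changes at or below this are ignored
        self.x_ref = [0.0] * len(weights[0])
        self.y = [0.0] * len(weights)
        self.column_updates = 0         # weight columns actually fetched

    def step(self, x):
        for j, xj in enumerate(x):
            delta = xj - self.x_ref[j]
            if abs(delta) <= self.threshold:
                continue  # small change: skip column j entirely
            for i, row in enumerate(self.weights):
                self.y[i] += row[j] * delta
            self.x_ref[j] = xj  # remember the value that was propagated
            self.column_updates += 1
        return list(self.y)


if __name__ == "__main__":
    mv = DeltaMatVec([[1.0, 2.0], [3.0, 4.0]], threshold=0.1)
    print(mv.step([1.0, 0.0]))   # prints: [1.0, 3.0]
    print(mv.step([1.05, 0.5]))  # prints: [2.0, 5.0]  (change of 0.05 in x[0] skipped)
    print(mv.column_updates)     # prints: 2
```

The exact product for the second input would be [2.05, 5.15]. Because every skipped change is at most the threshold, the error in each output is bounded by the threshold times the sum of the absolute weights in that row; the threshold therefore trades accuracy for temporal sparsity.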

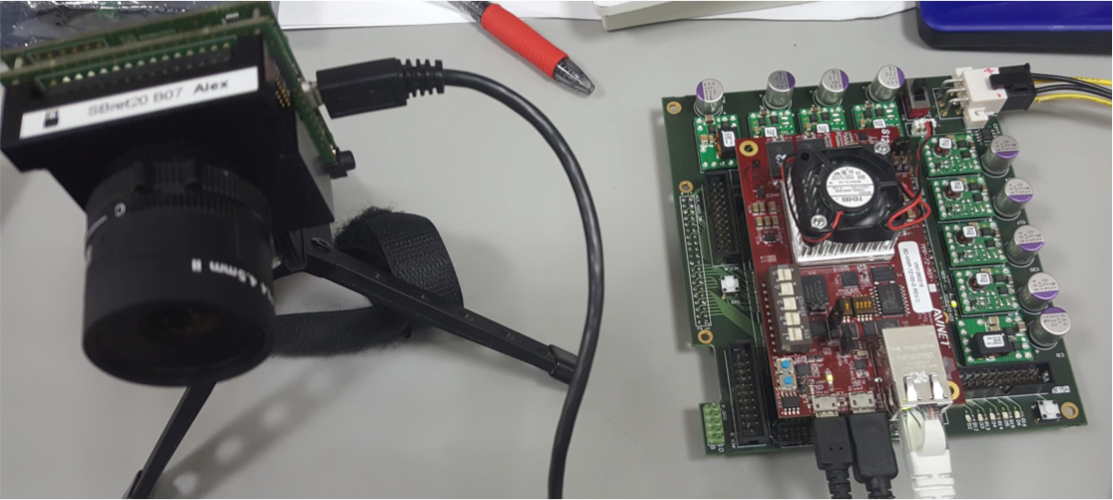

FPGA prototypes of the target architectures were successfully developed during Phase 1, and this work continues in Phase 2. Using SoCs that combine FPGA fabric with ARM cores was a key decision, because it enabled standalone demonstrations of this emerging technology, such as the Roshambo (rock-paper-scissors) game, which uses a dedicated CNN to make predictions at 120 fps (see https://www.youtube.com/watch?v=FQYroCcwkS0).

Other key results showed that:

- Spiking neural networks (SNNs) can achieve accuracy equivalent to that of conventional analog (non-spiking) neural networks, even for very deep CNNs such as VGG16 and GoogLeNet.

- Both CNNs and RNNs can be trained with greatly reduced weight and state precision, yielding large savings in memory bandwidth.
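The SNN accuracy result rests on the fact that, under rate coding, a spiking neuron's firing rate can approximate a ReLU activation. The sketch below simulates a non-leaky integrate-and-fire neuron with reset by subtraction, driven by a constant input. It illustrates only the general ANN-to-SNN conversion principle; the function name and its parameters are hypothetical and not the project's conversion method.

```python
def if_firing_rate(input_current, n_steps=1000, threshold=1.0):
    """Firing rate (spikes per time step) of a non-leaky integrate-and-fire neuron (hypothetical helper)."""
    v = 0.0       # membrane potential
    spikes = 0
    for _ in range(n_steps):
        v += input_current       # integrate the constant input
        if v >= threshold:
            v -= threshold       # reset by subtraction keeps the residual charge
            spikes += 1
    return spikes / n_steps


if __name__ == "__main__":
    for a in (-0.5, 0.0, 0.25, 0.5, 1.5):
        print(a, max(a, 0.0), if_firing_rate(a))  # input, ReLU(input), spike rate
```

For inputs between 0 and the threshold the rate tracks ReLU to within roughly 1/n_steps, and negative inputs never fire. Above the threshold the rate saturates at one spike per step (the 1.5 case), which is why conversion schemes typically normalize activations.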
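The bandwidth saving from lower precision follows from simple arithmetic: the bytes moved scale linearly with the bits per value. The sketch below assumes a uniform symmetric quantizer, one common choice, and computes the storage cost. The function names and the 32-bit versus 4-bit example are assumptions, and the reduced-precision training procedure itself is not shown.

```python
def quantize_symmetric(weights, n_bits):
    """Map floats to signed n-bit integer codes with a shared scale: w ~= code * scale."""
    q_max = 2 ** (n_bits - 1) - 1               # e.g. 7 for 4 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / q_max if max_abs > 0 else 1.0
    codes = [max(-q_max, min(q_max, round(w / scale))) for w in weights]
    return codes, scale


def weight_bytes(n_weights, n_bits):
    """Bytes needed to store (or stream) n_weights values at n_bits each."""
    return n_weights * n_bits / 8


if __name__ == "__main__":
    codes, scale = quantize_symmetric([0.42, -0.91, 0.05, 0.77], n_bits=4)
    print(codes, [round(c * scale, 3) for c in codes])
    # 1M weights: 32-bit floats vs 4-bit codes
    print(weight_bytes(1_000_000, 32) / weight_bytes(1_000_000, 4))  # prints: 8.0
```

Each reconstructed weight is within half a quantization step of the original, while every weight (or stored state value) fetched from external memory costs 4 bits instead of 32.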