Event-based Cognitive Visual and Auditory Sensory Fusion

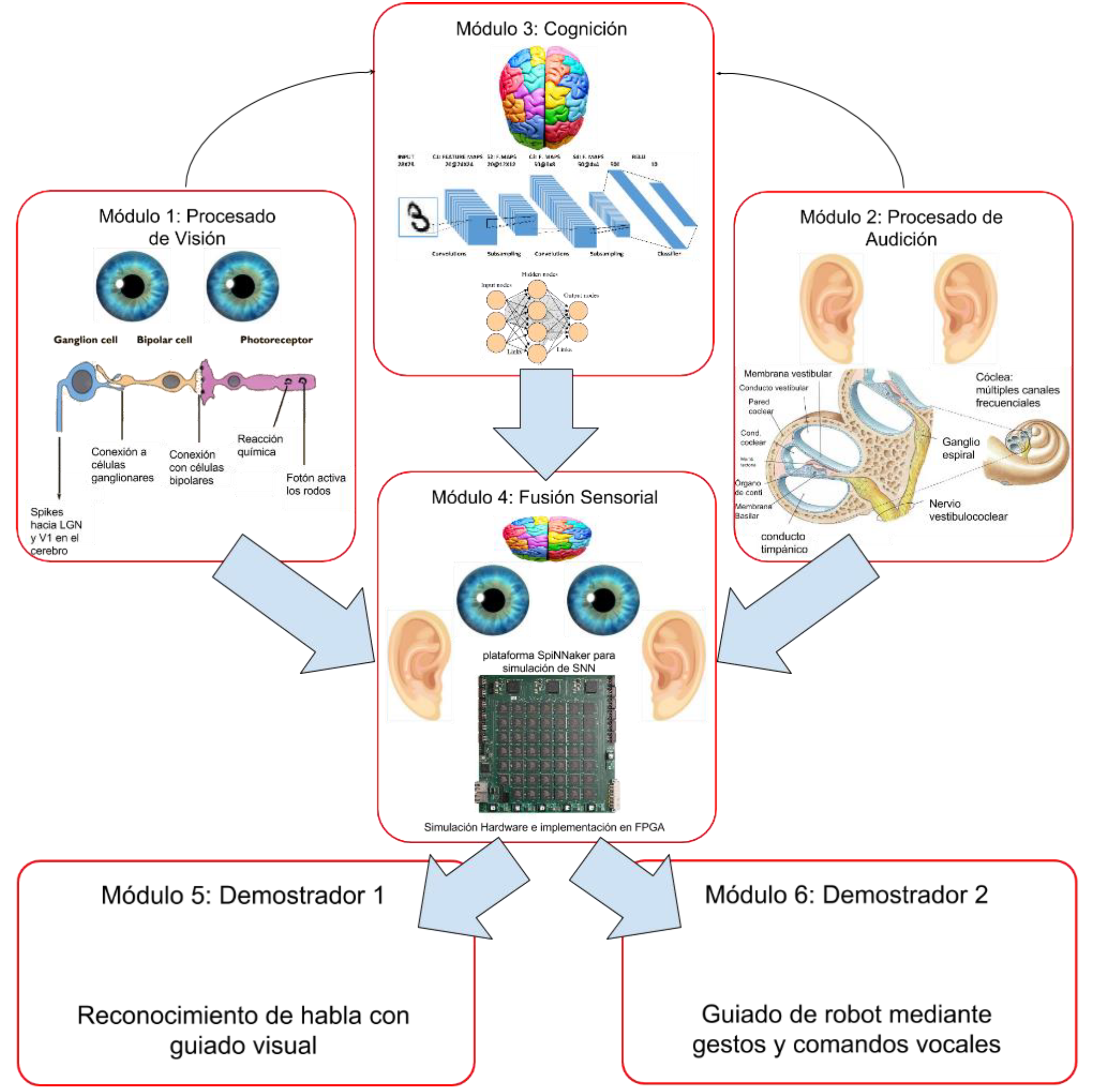

The overall goal of the COFNET project is to advance the theoretical and technological development of neuro-inspired, event-based sensing and processing systems, and to demonstrate their potential to solve practical problems more efficiently than conventional technologies. In particular, COFNET addresses event-based vision and audition sensing and processing, event-based visual and auditory recognition systems and their training, and the fusion of information from these sensors to perform multisensory recognition tasks in real time. COFNET aims to demonstrate the superior performance of event-based technology in two practical problems: visually guided speech recognition in noisy environments, and robot guidance through combined gesture and voice commands.

PI: Alejandro Linares Barranco / Ángel Francisco Jiménez Fernández

Type: Plan Estatal 2013-2016 Excelencia – Proyectos I+D (Spanish State Plan 2013-2016, Excellence: R&D Projects)

Reference: TEC2016-77785-P

Funded by: Ministerio de Economía y Competitividad (Spanish Ministry of Economy and Competitiveness)

Start date: 30-12-2016

End date: 29-12-2020

Researchers:

Daniel Cascado Caballero

Elena Cerezuela Escudero

Fernando Díaz del Río

Juan Pedro Domínguez Morales

Manuel Jesús Domínguez Morales

Francisco Gómez Rodríguez

Gabriel Jiménez Moreno

Lourdes Miró Amarante

Rafael Paz Vicente

Fernando Pérez Peña

Manuel Rivas Pérez

Saturnino Vicente Díaz

Related posts:

- Deep Neural Networks for the Recognition and Classification of Heart Murmurs Using Neuromorphic Auditory Sensors

- Neuropod: A real-time neuromorphic spiking CPG applied to robotics

- RTC at Fundación DesQbre News

- ISCAS 2017 Live Demonstration – Multilayer Spiking Neural Network for Audio Samples Classification Using SpiNNaker

- NullHop: A Flexible Convolutional Neural Network Accelerator Based on Sparse Representations of Feature Maps

- NAVIS: Neuromorphic Auditory VISualizer Tool

- A Binaural Neuromorphic Auditory Sensor for FPGA: A Spike Signal Processing Approach

- A spiking neural network for real-time Spanish vowel phonemes recognition

- ED-ScorBot

- Inter-spikes-intervals exponential and gamma distributions study of neuron firing rate for SVITE motor control model on FPGA

- Neuro-Inspired Spike-Based Motion: From Dynamic Vision Sensor to Robot Motor Open-Loop Control through Spike-VITE

- Fully neuromorphic system

- Stereo Matching: From the Basis to Neuromorphic Engineering

- ISCAS 2012 Demo